Similar Articles

CRM vs. Rodin Gen 1 : Exploring the New Frontier in 3D Modeling 🗻

6/2/2025

A glance to Rodin Gen-1.5

6/2/2025

Rodin Gen-1, the best 3D Generation AI ?

6/2/2025

UI/UX in the Matrix: Surviving and Thriving in AR/VR and Game Design

6/28/2025

They Said It Would Burn: Tales from the Trenches of PC Enthusiasm

7/15/2025

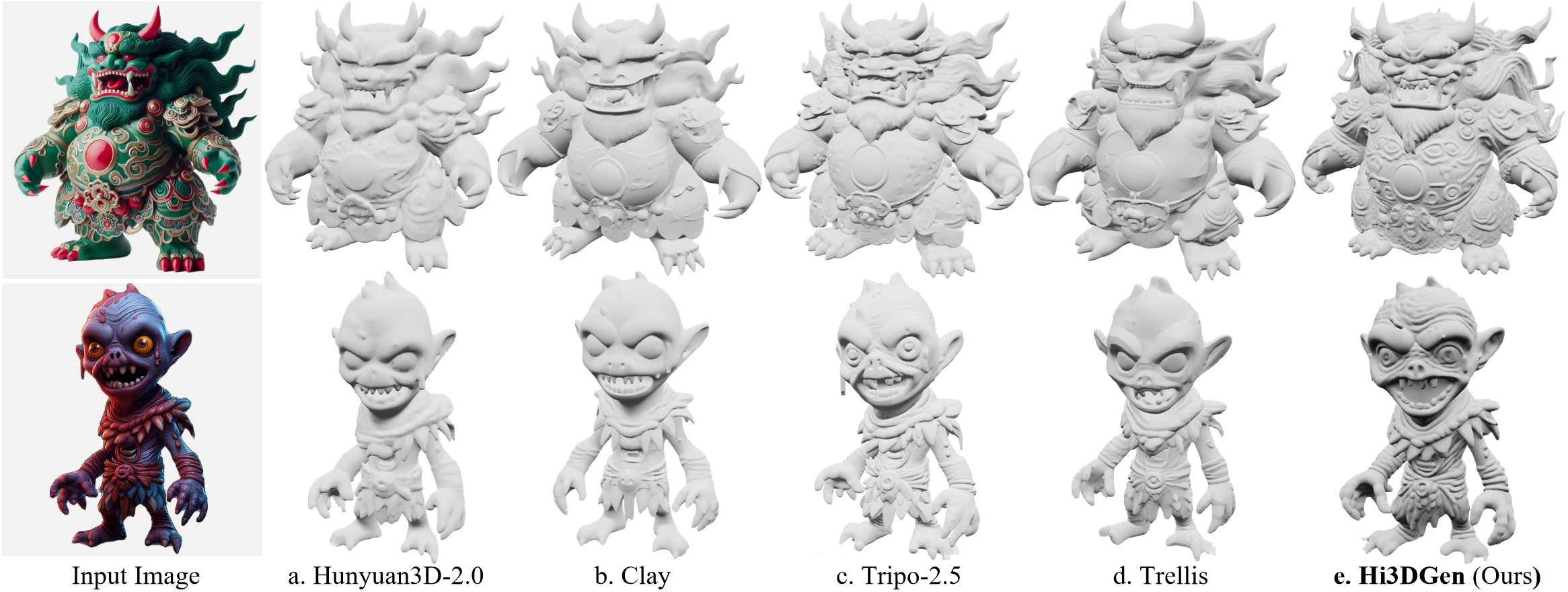

Hi3DGen vs Hunyuan 3D-2.5

The AI race for 3D model generation just got intense.

Looking to turn a single image—or a few words—into a clean, printable, textured 3D model?

Welcome to 2025, where AI now creates your assets faster than your art team can schedule a standup.

Two names dominate this new frontier: Hi3DGen (ByteDance) and Hunyuan 3D-2.5 (Tencent).

One focuses on geometry and fidelity, the other on full textured pipelines. Both are wild.

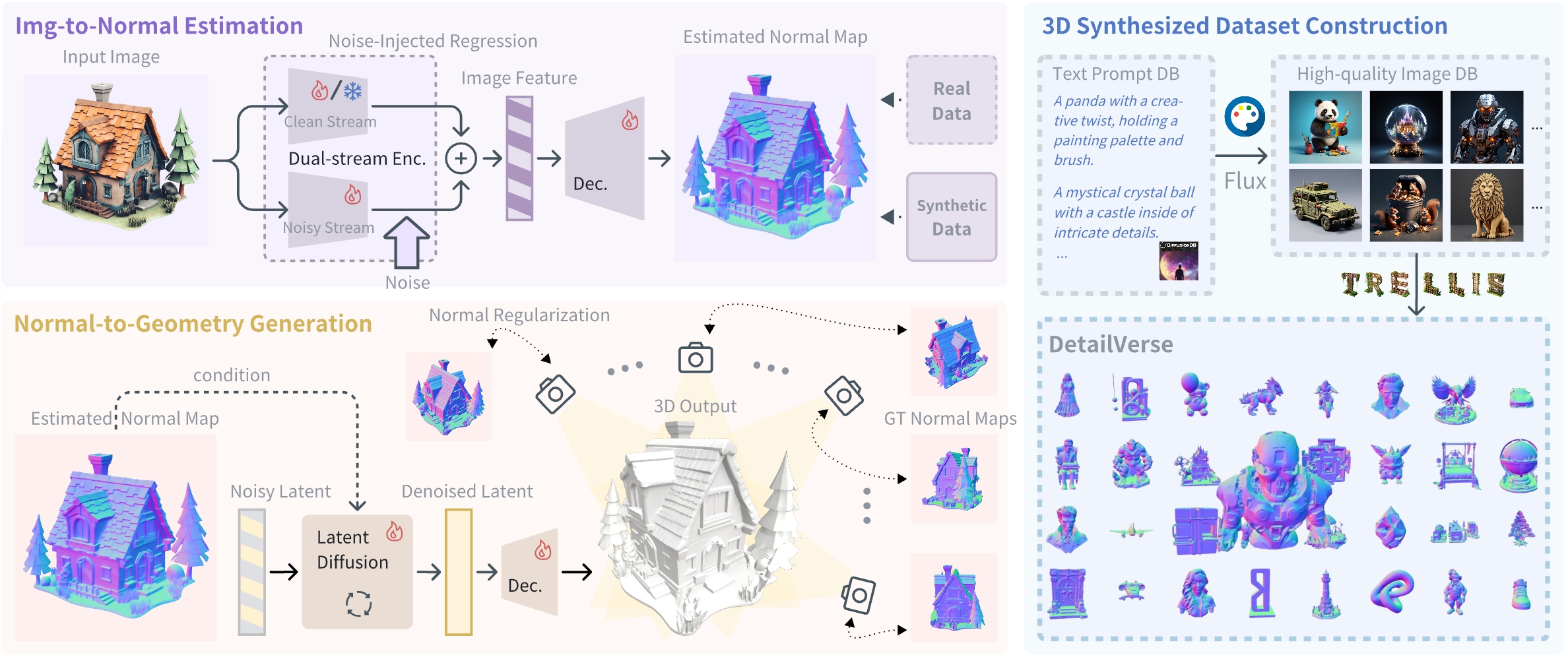

🧠 Hi3DGen: Geometry-first, Texture-free, Detail-obsessed

Hi3DGen is a research model from ByteDance’s Stable-X team.

It first predicts a normal map from the input image, then feeds that map into a latent diffusion model that reconstructs precise 3D geometry.
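To make the "normal" stage concrete: a normal map stores, for every pixel, the unit vector perpendicular to the surface. Hi3DGen predicts it from the image with a learned network, which this sketch does not reproduce. It only illustrates what a normal map encodes by computing one analytically from a depth grid, assuming `depth[y][x]` holds the surface height at each pixel. The function names are hypothetical.

```python
import math

def normals_from_depth(depth):
    """depth: list of rows, depth[y][x] = z. Returns rows of unit (nx, ny, nz) normals."""
    h, w = len(depth), len(depth[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            # Central differences inside the grid, one-sided differences at the borders.
            x0, x1 = max(x - 1, 0), min(x + 1, w - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, h - 1)
            dzdx = (depth[y][x1] - depth[y][x0]) / max(x1 - x0, 1)
            dzdy = (depth[y1][x] - depth[y0][x]) / max(y1 - y0, 1)
            # For a surface z = f(x, y), (-dz/dx, -dz/dy, 1) is perpendicular to it.
            nx, ny, nz = -dzdx, -dzdy, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            row.append((nx / length, ny / length, nz / length))
        out.append(row)
    return out

def encode_rgb(normal):
    # Map each component from [-1, 1] to [0, 255], the usual 8-bit normal-map encoding.
    return tuple(round((c + 1.0) * 127.5) for c in normal)
```

A flat surface gives straight-up normals, which is why untouched areas of a normal map look uniformly light blue.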

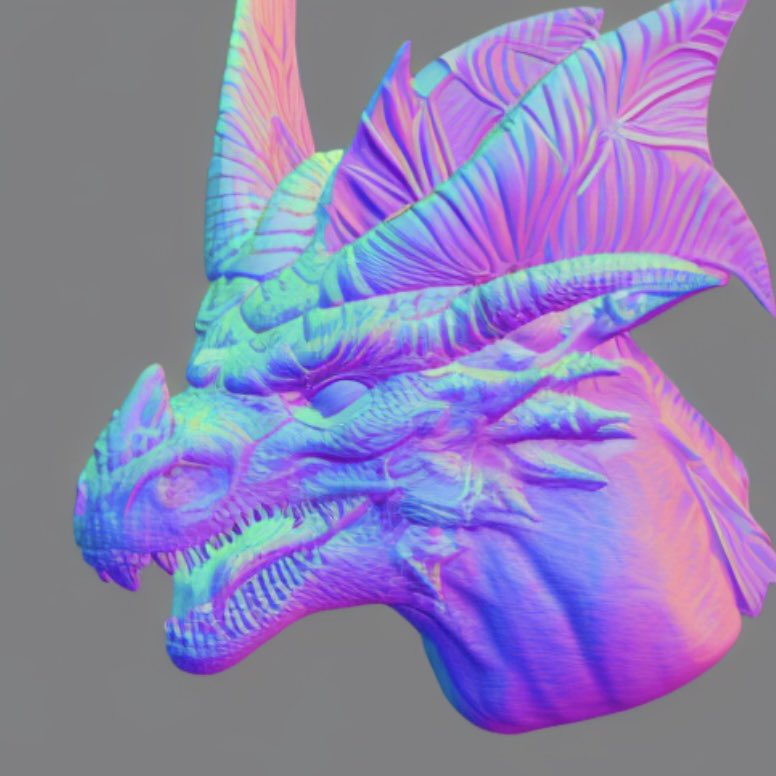

No textures. No fancy PBR materials. Just crispy edges, top-notch normals, and clean topology.

If you care about printable, sculptable, or high-fidelity meshes, this is the one.
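"Printable" has a checkable meaning: slicers generally expect a closed (watertight) mesh. A quick necessary condition is that every edge of a triangle mesh is shared by exactly two triangles. The sketch below assumes faces are given as vertex-index triples and uses hypothetical helper names; it is not part of Hi3DGen, and it does not detect self-intersections or flipped faces.

```python
from collections import Counter

def edge_counts(faces):
    """Count how many triangles use each undirected edge."""
    counts = Counter()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            counts[(min(u, v), max(u, v))] += 1
    return counts

def is_watertight(faces):
    """True if every edge is shared by exactly two triangles (closed, edge-manifold)."""
    counts = edge_counts(faces)
    return bool(counts) and all(n == 2 for n in counts.values())

def boundary_edges(faces):
    """Edges used by only one triangle: the rims of holes in the mesh."""
    return sorted(e for e, n in edge_counts(faces).items() if n == 1)

# A tetrahedron is the smallest closed triangle mesh.
tetra = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
```

Dropping one face of the tetrahedron opens a hole, and `boundary_edges` then reports exactly that face's three edges.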

➡️ Try the online demo (Hugging Face)

📢 Full code will be open-sourced in April 2025

🧱 Hunyuan 3D-2.5: From prompt to textured model in 20 seconds

Hunyuan is Tencent’s all-in-one 3D generator. Give it a text prompt or an image and get back a fully textured 3D model with PBR maps, normals, and UVs. It even supports stylized characters.

The pipeline:

- Geometry: Hunyuan3D-DiT (Diffusion Transformer)

- Textures: Hunyuan3D-Paint

- Output: .obj/.gltf with 1024x1024 textures, AO maps, normals, and UVs
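To see where textures, normals, and UVs live in an .obj file, here is a minimal sketch that writes a textured quad by hand. It is generic Wavefront OBJ/MTL, not Hunyuan3D's exporter; the file names `material.mtl` and `albedo_1024.png` and the material name `textured` are hypothetical placeholders. Classic MTL only covers the base-color map here; glTF 2.0 has a built-in metallic-roughness PBR material model for the rest.

```python
def quad_obj(mtl_file="material.mtl", material="textured"):
    """Return OBJ text for a unit quad in the XY plane with UVs and a +Z normal."""
    positions = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)]
    uvs = [(0, 0), (1, 0), (1, 1), (0, 1)]  # corners map the whole texture onto the quad
    lines = [f"mtllib {mtl_file}", f"usemtl {material}"]
    lines += [f"v {x} {y} {z}" for x, y, z in positions]
    lines += [f"vt {u} {v}" for u, v in uvs]
    lines.append("vn 0 0 1")
    # OBJ indices are 1-based; each face corner is written as v/vt/vn.
    # Counter-clockwise winding seen from +Z matches the +Z normal.
    for tri in ((1, 2, 3), (1, 3, 4)):
        lines.append("f " + " ".join(f"{i}/{i}/1" for i in tri))
    return "\n".join(lines) + "\n"

def quad_mtl(material="textured", texture="albedo_1024.png"):
    # map_Kd points at the diffuse (base color) texture image.
    return f"newmtl {material}\nmap_Kd {texture}\n"
```

Saving both strings next to a 1024×1024 image should give a quad that Blender can import with the texture applied.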

Perfect for:

- Game prototyping

- Virtual product design

- Fast concept-to-asset workflows

➡️ Try the official demo (limited free trials)

🧠 Code repo (Tencent Hunyuan 3D Community License)

Note: Not available in the EU (regulatory restrictions)

🧪 Which one should you use?

If you want clean, accurate geometry for printing, sculpting, or manual texturing: Hi3DGen.

If you want an all-in-one asset (textures + UV + ready for Unity/Blender): Hunyuan 3D-2.5.

Hi3DGen feels like an artist’s base mesh assistant.

Hunyuan feels like a game dev intern on crack. (In a good way.)

🧰 Already used in production?

Yes, and not just by indie hackers.

- Tencent Games uses Hunyuan internally for prototyping and concept assets

- ByteDance’s Stable-X team collaborated with VFX artists to optimize mesh fidelity

- Artists on Reddit and Hugging Face are already posting high-detail busts and figurines from Hi3DGen

A few models have even been 3D printed already, and the results are surprisingly clean.

🛠️ Roadmap & Next Versions

- Hi3DGen will be open-sourced in April 2025. A textured variant is rumored.

- Hunyuan v3.0 incoming (Summer): simplified topology, rigging-friendly output, GLB export.

- Plugins for Blender & Unity under development.

- Meta & OpenAI are working on video-to-3D—expect chaos soon.
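GLB, mentioned in the roadmap above, is the single-file binary container of glTF 2.0: a 12-byte header followed by a JSON chunk and an optional binary (BIN) chunk. The sketch below packs only the JSON chunk, following the layout in the glTF 2.0 specification; it carries no geometry buffers, and `minimal_glb` is a hypothetical helper, not Hunyuan's exporter.

```python
import json
import struct

GLB_MAGIC = 0x46546C67   # b"glTF" read as a little-endian uint32
CHUNK_JSON = 0x4E4F534A  # b"JSON" read as a little-endian uint32

def minimal_glb(gltf: dict) -> bytes:
    """Pack a glTF JSON document into a GLB container (JSON chunk only, no BIN chunk)."""
    body = json.dumps(gltf, separators=(",", ":")).encode("utf-8")
    # The JSON chunk must be padded with spaces to a 4-byte boundary.
    body += b" " * (-len(body) % 4)
    chunk = struct.pack("<II", len(body), CHUNK_JSON) + body
    # 12-byte header: magic, container version 2, total file length in bytes.
    header = struct.pack("<III", GLB_MAGIC, 2, 12 + len(chunk))
    return header + chunk
```

A real mesh export would add `buffers`, `bufferViews`, `accessors`, and `meshes` to the JSON and append a BIN chunk holding the vertex data.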

✨ Bonus: Other notable contenders

- Rodin (Gen 1.5)

Not public, but in private tests. Excellent surface quality.

- Trellis

Academic image-to-3D model: slower and less detailed than Hi3DGen, but stable.

- CLAY

Focused on stylized characters, popular in animation pipelines.

🏁 Final Thoughts

In 2025, AI 3D gen isn’t science fiction anymore—it’s a tool.

Hi3DGen and Hunyuan aren’t just toys—they’re actual weapons in the hands of developers and artists.

Your choice depends on whether you need:

- Raw fidelity with artistic control → Hi3DGen

- Fast, usable assets with textures → Hunyuan 3D-2.5

If you’re not experimenting with these tools yet, you’re already late.